Explainable AI: Striking the Right Balance for Financial Services

Synechron Business Consulting Practice

Synechron Consultants, Amsterdam, The Netherlands


The real risk with AI isn’t malice but competence. A superintelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we’re in trouble.

Stephen Hawking

English theoretical physicist, cosmologist and author

The financial services industry is going through a fundamental change. Technology is enabling firms to change the ways they operate and how they interact with customers, suppliers, partners and employees. The use of artificial intelligence (AI) and machine learning (ML) is increasingly prevalent throughout the industry, and AI is growing in sophistication, complexity and autonomy, creating great opportunities for business and society.

As AI matures, new challenges arise, such as explainability, accountability and ethical standards. A key challenge is that many AI applications operate as ‘black boxes’, offering little insight into how they reach their outcomes. As a result, regulatory interest in AI is growing, as is the need for AI to explain itself. In this article we examine general principles for the use of AI, stress the importance of explainability and highlight the trade-off between prediction power and explainability.

The need for explainable AI

Artificial Intelligence is defined by the Financial Stability Board as “the theory and development of computer systems able to perform tasks that traditionally have required human intelligence”.

Currently, there are many applications of AI in the financial sector, in both front-end and back-end business processes. Examples include chatbots, identity verification during client onboarding and fraud detection. As the number of applications grows, new challenges arise, such as the explainability of AI, which in simple terms means the ability to explain and understand why a decision was made.

A good example of why explainable AI matters is a bank loan approval process that has been handed over to an algorithm. When an applicant is rejected for a loan, there is a need to understand on what basis the algorithm decided to reject the application. A lack of transparency about why a particular decision was reached from the input data is a key challenge within AI.
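To make the loan example concrete, the sketch below shows one common way a transparent scoring model can explain a rejection. With a linear (logistic) score, each feature contributes its weight times its value, so the features that pulled the score down the most can be reported as reasons. The feature names, weights, bias and approval threshold are hypothetical values chosen for illustration, not parameters of any real bank’s model.

```python
import math

# Hypothetical, hand-picked model parameters for illustration only.
WEIGHTS = {
    "income_k": 0.04,         # annual income in thousands of euros
    "debt_to_income": -3.0,   # total debt divided by annual income
    "missed_payments": -0.8,  # missed payments in the last 24 months
    "years_employed": 0.15,
}
BIAS = -1.0
APPROVAL_THRESHOLD = 0.5  # assumed: approve if estimated probability >= threshold


def approval_probability(applicant: dict[str, float]) -> float:
    """Logistic score: sigmoid of the bias plus the weighted sum of features."""
    z = BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def reason_codes(applicant: dict[str, float], top_n: int = 2) -> list[tuple[str, float]]:
    """Return the features that lowered the score the most.

    In a linear model each feature adds weight * value to the score, so sorting
    the negative contributions in ascending order lists the strongest drivers
    of a rejection first.
    """
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    negatives = [(name, c) for name, c in contributions.items() if c < 0]
    return sorted(negatives, key=lambda item: item[1])[:top_n]


applicant = {"income_k": 35, "debt_to_income": 0.9, "missed_payments": 3, "years_employed": 2}
p = approval_probability(applicant)
decision = "approved" if p >= APPROVAL_THRESHOLD else "rejected"
print(f"{decision} (p={p:.2f})")
for name, contribution in reason_codes(applicant):
    print(f"  {name}: {contribution:+.2f}")
```

Production credit models are considerably more involved; the point here is only that in a linear model the “why” behind a decision can be read directly from the parameters.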

The concept of explainability is especially important in the financial sector, which is held to a higher societal standard than many other industries. AI incidents can have severe reputational effects for the financial system, and they can seriously affect the financial stability of market participants, consumers and the sector as a whole. The childcare allowance scandal in the Netherlands, in which about 8,500 Dutch parents were wrongfully accused of childcare allowance fraud by the Tax and Customs Administration, is a painful example that highlights the importance of preventing bias in algorithmic outcomes. It illustrates once more the pressing need to understand and explain, both internally and externally, how AI influences processes and outcomes.

Implementing explainable AI

Given the increased use of AI in the financial services industry and its potential impact, financial regulatory authorities globally have become more actively involved in the debate.

The Dutch Central Bank (DNB) published a discussion paper on general principles for the use of artificial intelligence in the financial sector. Synechron believes that the ‘SAFEST’ principles, as laid down by DNB in that paper, represent a much-needed regulatory response to many of the challenges inherent in the development of AI.

Being able to understand and explain how AI functions in your organization is key to controlling the associated processes and outcomes. The SAFEST principles can help organizations assess how responsible AI can be implemented. The principles cover six key aspects of the responsible use of AI: (i) Soundness, (ii) Accountability, (iii) Fairness, (iv) Ethics, (v) Skills and (vi) Transparency.

The DNB’s principles suggest the following best practices:

- Soundness - All AI applications in the financial services sector should be reliable and accurate, behave in a predictable manner, and operate within the boundaries of regulations.

- Accountability - Firms should be accountable for their use of AI, as AI applications may not always function as intended and can have negative consequences for the firm, its customers or society.

- Fairness - Fairness is vital for society’s trust in the financial sector, so it is important that financial firms’ AI applications do not disadvantage certain groups of customers.

- Ethics - To ensure customers’ trust, financial service providers must integrate ethical considerations into the development and deployment of their AI, so that customers can trust they are not being mistreated because of AI.

- Skills - Employees should have sufficient understanding of the strengths and limitations of the AI-enabled systems they work with, and board members should have the appropriate skills to oversee the risks and opportunities arising from AI.

- Transparency - Finally, transparency means that financial firms should be able to explain how and why they use AI in their business processes and how these applications function.

The hope is that these principles will enable financial services firms to evaluate their individual AI deployments and provide a baseline for strengthening the internal governance of AI applications.
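The Fairness principle does not prescribe a particular metric. One simple check often used in practice is to compare approval rates across customer groups. The sketch below computes the ratio of the lowest group approval rate to the highest, sometimes called the disparate impact ratio. The group labels and decisions are made-up illustrative data, and the 0.8 review threshold, borrowed from the “four-fifths” rule of thumb used in US employment practice, is an assumption rather than a DNB requirement.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of approved applications per group, from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, is_approved in decisions:
        totals[group] += 1
        approved[group] += int(is_approved)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


# Illustrative decisions only: group A approved 8 of 10, group B 5 of 10.
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 3))
if ratio < 0.8:  # assumed review threshold (four-fifths rule of thumb)
    print("Approval rates differ materially between groups: review the model.")
```

A low ratio does not by itself prove unfair treatment; it flags where the firm needs to be able to explain the difference.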

Striking the right model balance

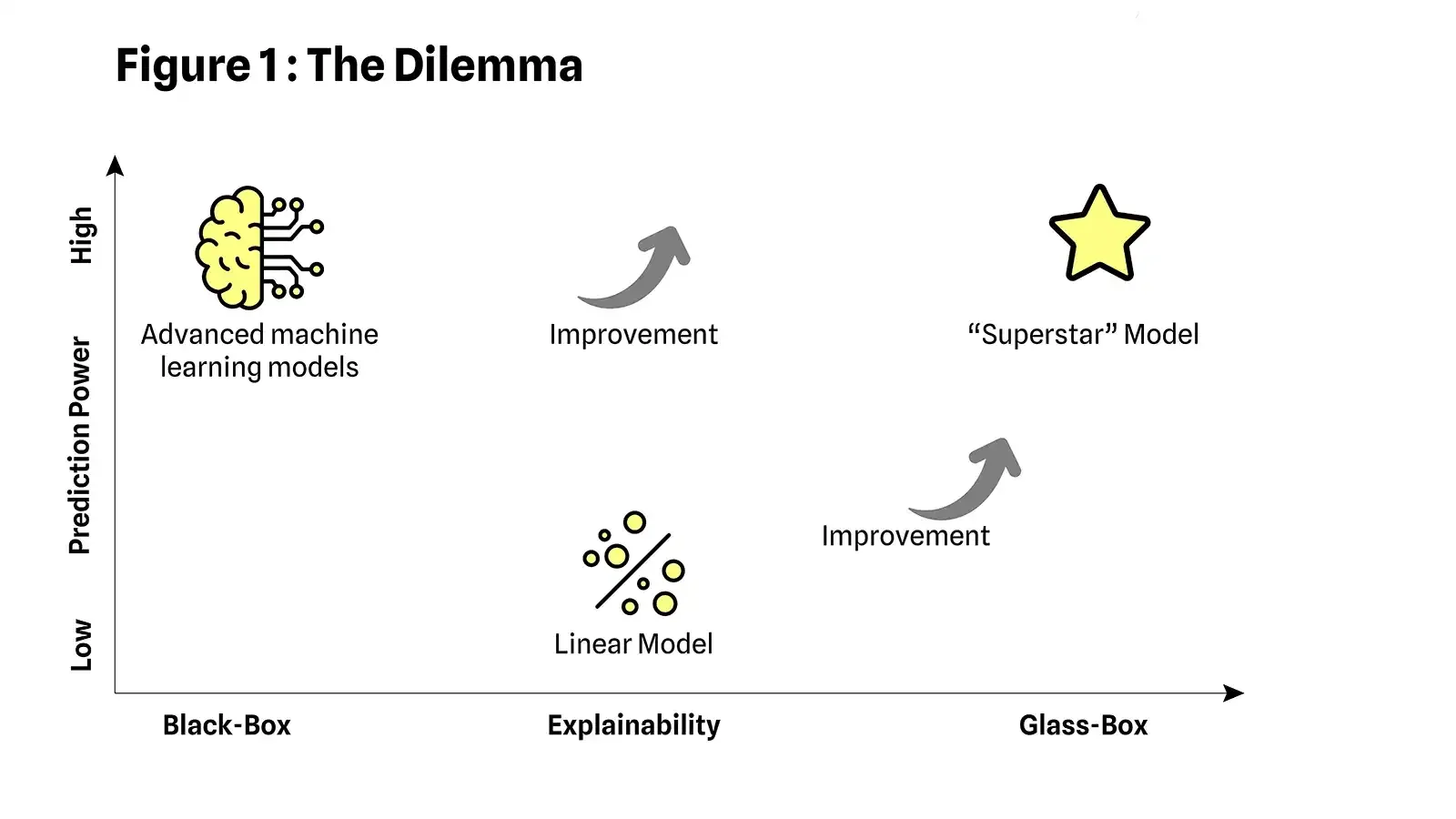

What we see in particular in the market is the dilemma of balancing prediction power against explainability. Three types of models, each a simplified representation of reality, illustrate this trade-off:

- First, the “Superstar”, or ideal model, is a glass-box solution with superior prediction power: it can explain its outcomes without compromising on prediction power.

- Second, the advanced machine learning model offers good prediction power but is hard to explain; it operates in the so-called ‘black-box’ realm.

- Third, the linear model provides good explainability, but it can lack prediction power.

Understanding model performance

There are two main approaches to reaching the “Superstar” model.

The first aims to improve the transparency of accurate black-box models using the “Use AI to Explain AI” methodology, in which other techniques are applied to the model to explain its outcomes.
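One widely used technique of the “Use AI to Explain AI” kind is permutation feature importance: treat the trained model as a black box, shuffle one input feature at a time and measure how much accuracy drops. The sketch assumes only that the model exposes a prediction function; `black_box_predict` and the synthetic dataset are hypothetical stand-ins for a real trained model and real data.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]


def accuracy(predict: Callable[[Row], int], X: list[Row], y: list[int]) -> float:
    """Fraction of rows for which the model predicts the correct label."""
    return sum(predict(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(predict: Callable[[Row], int], X: list[Row], y: list[int],
                           n_repeats: int = 10, seed: int = 0) -> list[float]:
    """Mean drop in accuracy when each feature column is shuffled on its own."""
    rng = random.Random(seed)
    baseline = accuracy(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(predict, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical black box; in practice this could be a trained ensemble or neural network.
def black_box_predict(row: Row) -> int:
    return int(2.0 * row[0] + 0.2 * row[1] > 1.0)  # feature 2 is ignored


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random(), data_rng.random()] for _ in range(500)]
y = [black_box_predict(row) for row in X]  # synthetic labels from the same rule
importances = permutation_importance(black_box_predict, X, y)
print([round(v, 3) for v in importances])
```

The explanation only needs inputs and outputs, which is why it applies to any model. Libraries such as scikit-learn provide a ready-made version as `sklearn.inspection.permutation_importance`.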

The second aims to improve the accuracy of simple, explainable linear models through additional feature engineering.
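To illustrate this second route, the sketch below fits the same simple linear regression twice to a synthetic dataset whose target depends on the square of the input. On the raw feature a straight line fits poorly; after engineering the feature x², the model is still linear and its single coefficient is still directly readable, but the fit improves sharply. The noiseless data-generating function is an assumption made purely for illustration.

```python
def fit_simple_linear(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def r_squared(xs: list[float], ys: list[float], intercept: float, slope: float) -> float:
    """Share of the variance in ys explained by the fitted line."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# Synthetic data: by construction the target is 3 * x^2 + 1, with x from -2 to 2.
xs = [i / 10 for i in range(-20, 21)]
ys = [3 * x * x + 1 for x in xs]

raw_fit = fit_simple_linear(xs, ys)
x_squared = [x * x for x in xs]  # engineered feature
eng_fit = fit_simple_linear(x_squared, ys)

print("raw feature R^2:", round(r_squared(xs, ys, *raw_fit), 3))
print("engineered x^2 R^2:", round(r_squared(x_squared, ys, *eng_fit), 3))
```

In practice the right transformation is rarely known in advance and has to come from domain knowledge or experimentation.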

However, depending on the use case, the room for improvement can be very limited. In more practical terms, the following models illustrate the trade-off between explainability and prediction power (complexity).

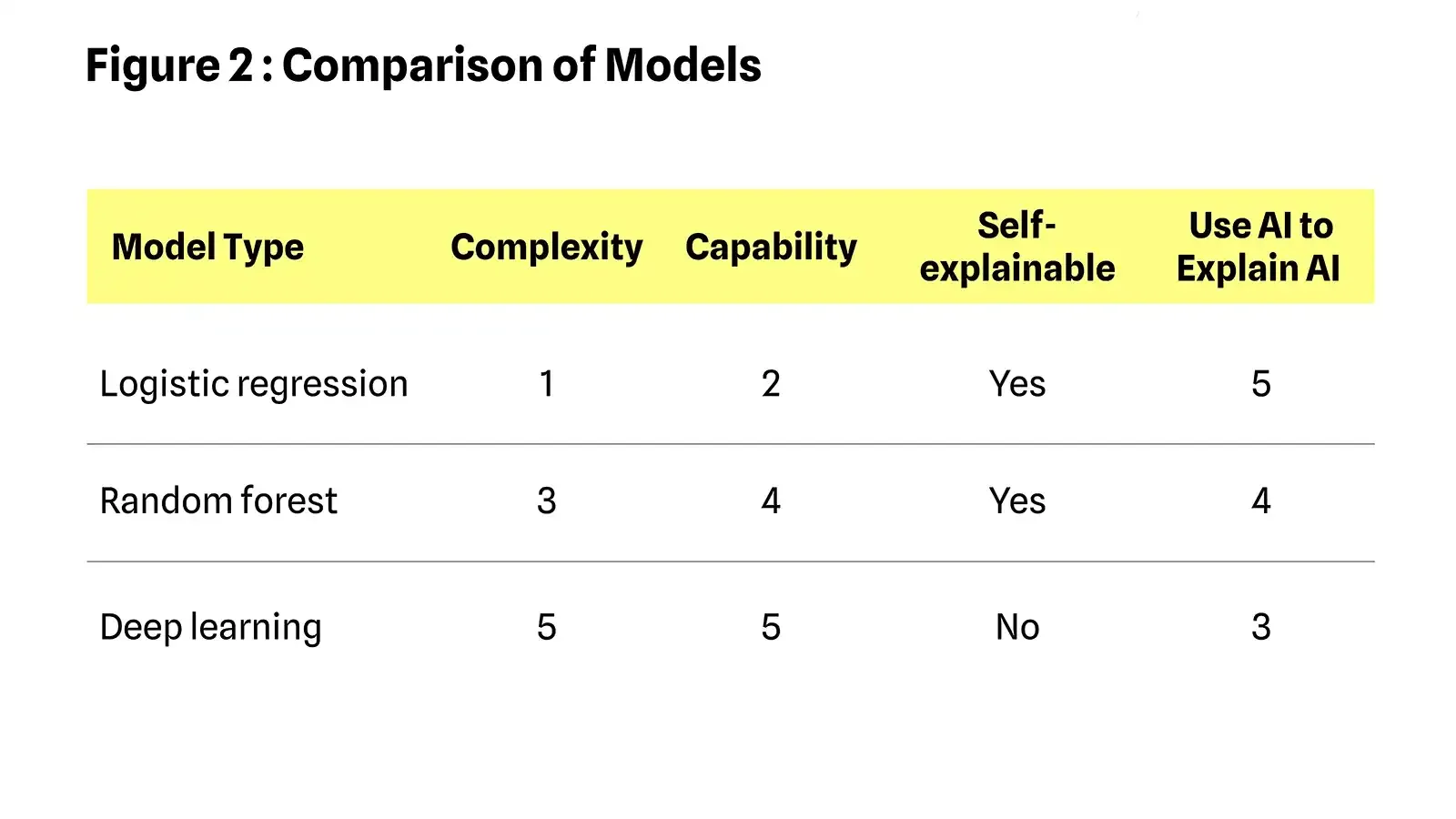

Model characteristics include:

- Complexity: how complex the model is in terms of parameters and training process (1-5).
- Capability: the ability to address complex problems (1-5).
- Self-explainable: whether the model’s decision can be understood directly from its parameters.
- Use AI to Explain AI: how well other techniques can be applied to achieve explainability (1-5).

Simple vs. complex:

Simple models, such as logistic regression, are very good for simple tasks, for example predicting whether an email is spam. They involve fast and simple training, and the model’s decision can be understood through its parameters. Logistic regression is therefore well suited to tasks that involve only numerical inputs and a small number of features.
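The sketch below trains a tiny logistic regression by batch gradient descent on a toy spam dataset with two numerical features and then reads the learned weights: a positive weight pushes towards “spam”, a negative one towards “not spam”. The features (number of links, and whether the sender is a known contact) and the eight labelled examples are invented for illustration; a real spam filter would use far more data and features.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X: list[list[float]], y: list[int],
                   lr: float = 0.1, epochs: int = 2000) -> tuple[float, list[float]]:
    """Batch gradient descent on the mean log-loss; returns (bias, weights)."""
    n_features = len(X[0])
    bias, weights = 0.0, [0.0] * n_features
    for _ in range(epochs):
        grad_b, grad_w = 0.0, [0.0] * n_features
        for row, label in zip(X, y):
            # Gradient of the log-loss w.r.t. the score is (predicted - actual).
            error = sigmoid(bias + sum(w * x for w, x in zip(weights, row))) - label
            grad_b += error
            for j, x in enumerate(row):
                grad_w[j] += error * x
        bias -= lr * grad_b / len(X)
        weights = [w - lr * g / len(X) for w, g in zip(weights, grad_w)]
    return bias, weights


# Toy features: [number of links in the email, sender is a known contact (1/0)]
X = [[8, 0], [5, 0], [7, 0], [3, 0], [0, 1], [1, 1], [2, 1], [0, 0]]
y = [1, 1, 1, 1, 0, 0, 0, 0]  # 1 = spam, 0 = not spam

bias, (w_links, w_contact) = train_logistic(X, y)
print(f"bias={bias:.2f}, links={w_links:.2f}, known_contact={w_contact:.2f}")
```

The entire decision rule is captured by three numbers that a reviewer can inspect directly, which is what makes such a model self-explainable.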

Moderately complex models, such as random forests, have more prediction power and can solve complex tasks. In general, these models can show good performance. Inspecting their feature importance scores provides insight into which features are most and least important to the model when making a prediction. The downside is that the number of parameters can explode for complex tasks, so the model’s decision-making can become very hard to track and understand.

Deep learning models have enormous capability to solve the most complex problems (e.g., text understanding, speech to text and image classification). Training and parameter optimization are very complex processes that require a lot of effort. Given the number of parameters and the complexity of training, it is not possible to derive model insights or understand predictions directly from the model. Some techniques can address explainability: the “Use AI to Explain AI” approach can help improve explainability in AI solutions and unearth the “why” behind a model’s outcome. However, these techniques are limited, and how well they work depends on the use case.
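For a single prediction, one simple model-agnostic way to approach the “why” is local sensitivity analysis: nudge each input of the instance slightly and observe how the black-box output changes. This is a deliberately simplified stand-in for established local-explanation methods such as LIME or SHAP, not an implementation of them, and `opaque_model` is a hypothetical placeholder for a deep learning model.

```python
import math
from typing import Callable


def local_sensitivity(model: Callable[[list[float]], float], x: list[float],
                      eps: float = 1e-4) -> list[float]:
    """Central finite-difference estimate of d(output)/d(input_j) at the point x."""
    slopes = []
    for j in range(len(x)):
        up, down = list(x), list(x)
        up[j] += eps
        down[j] -= eps
        slopes.append((model(up) - model(down)) / (2 * eps))
    return slopes


# Hypothetical non-linear "black box" returning a score between 0 and 1.
def opaque_model(x: list[float]) -> float:
    hidden = math.tanh(1.5 * x[0] - 0.5 * x[1]) + 0.1 * x[2] ** 2
    return 1.0 / (1.0 + math.exp(-hidden))


instance = [0.2, 1.0, -0.3]
slopes = local_sensitivity(opaque_model, instance)
for j, slope in enumerate(slopes):
    print(f"feature {j}: local effect {slope:+.3f}")
```

Because these slopes only describe the model’s behaviour in a small neighbourhood of one instance, they can change from instance to instance, which is one of the limitations mentioned above.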

Conclusion

The principles proposed by DNB should enable financial services firms to evaluate their AI deployments and can provide a baseline for strengthening the internal governance of AI applications. Organizations must understand the potential benefits and risks of AI and how they should be managed.

As illustrated in this article, achieving explainability is not always easy because there is a trade-off between prediction power and explainability: the greater an AI algorithm’s prediction power, the less interpretable it becomes. The “Use AI to Explain AI” approach can help improve explainability in AI solutions and unearth why a certain decision has been made.

Moreover, incorrect AI outcomes can have a detrimental impact on financial institutions. Making sure that AI applications do not function as ‘black boxes’, and being able to understand and explain, both internally and externally, how AI functions in your organization and affects wider society, is key to controlling the associated processes and outcomes.

Why Synechron?

Helping our clients with Digital Transformation

Our consulting services blend deep business and technology advisory capabilities, spanning a broad range of specialisms, from client lifecycle management and sustainable finance to data strategy and cloud adoption. We thrive on complex challenges in highly regulated environments and work hand-in-hand with our clients to align competing internal agendas and drive meaningful business outcomes.