Branislav Popović

branislav.popovic@synechron.com, Synechron

AI

In the race to integrate AI across critical business workflows, precision and adaptability must supersede brute utility. As enterprises increasingly rely on AI agents to perform complex tasks, the difference between effective and ineffective outcomes often comes down to one factor: context. The Model Context Protocol (MCP) represents a shift from simple tool execution to context-aware AI orchestration. However, the ecosystem’s maturity is uneven. While the MCP specification enables agents to discover and interact with tools, prompts, and resources, most clients today only support limited subsets of that vision. This article explores a practical evaluation of MCP usage against traditional direct tool calls, highlighting performance trade-offs, strategic considerations, and the emerging gap between protocol potential and client implementation.

Traditional AI deployments typically rely on statically connected tools. In such systems, an agent executes predefined tool calls based on a user query, with minimal context or flexibility. The Model Context Protocol introduces a new abstraction layer, exposing tools, reusable prompt templates, and structured resources as discoverable interfaces. When properly supported, this allows agents to reason over what they can access, decide how to proceed, and adjust their behavior dynamically. However, most MCP clients do not yet support automatic access to prompts and resources. Only a limited set of implementations, such as AgenticFlow, Claude Desktop App, MCPHub, and GitHub Copilot in VS Code, support all three modalities (tools, prompts, and resources). Many others are tool-only or require either user-triggered or application-triggered flows for context acquisition. The architecture is in place, but the operational bridge is still under construction.

To understand the practical impact of this architectural distinction, an experiment was conducted using a Python framework designed to connect AI models directly to tools, prompts, and resources via MCP. The setup included:

- A stock analysis server with a tool for accessing mock historical stock data
- A prompt template for standardized performance analysis
- A resource listing the available stock tickers
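The three pieces of this setup can be pictured as three plain functions. The sketch below is not the experiment's code: the tickers, prices, function names, and prompt wording are all hypothetical, and the wiring into an actual MCP server is left out so that the example stays runnable with the standard library alone.

```python
from datetime import date, timedelta


def _mock_series(start_price: float, days: int = 5) -> dict[str, float]:
    """Build a small invented price series keyed by ISO date."""
    first = date(2024, 1, 1)
    return {
        (first + timedelta(days=i)).isoformat(): round(start_price + i * 1.5, 2)
        for i in range(days)
    }


# Hypothetical mock data: ticker -> {ISO date: closing price}.
MOCK_PRICES = {"AAA": _mock_series(100.0), "BBB": _mock_series(50.0)}


def get_stock_data(ticker: str, start: str, end: str) -> dict[str, float]:
    """Tool: return mock closing prices between two ISO dates (inclusive)."""
    series = MOCK_PRICES.get(ticker.upper(), {})
    # ISO dates sort lexicographically, so string comparison is sufficient.
    return {day: price for day, price in series.items() if start <= day <= end}


def performance_analysis_prompt(ticker: str, start: str, end: str) -> str:
    """Prompt template: standardized instructions for a performance analysis."""
    return (
        f"Analyze the performance of {ticker} between {start} and {end}. "
        "Report the first and last closing price, the percentage change, "
        "and the highest and lowest close. "
        "If no data is available, say so explicitly instead of estimating."
    )


def list_tickers() -> list[str]:
    """Resource: the tickers for which data exists."""
    return sorted(MOCK_PRICES)
```

An agent that can see all three can check `list_tickers()` before calling `get_stock_data(...)`, and can frame its answer with `performance_analysis_prompt(...)`. A tool-only agent sees only the first function.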

This setup was compared against a more traditional tool-calling approach, where the model could access stock data tools but lacked direct awareness of prompts or resource availability. The experiment utilized GPT-4.1 as the large language model. Both systems were evaluated using six types of queries designed to test their handling of varying levels of complexity and uncertainty. These ranged from straightforward lookups to vague or invalid requests, providing a comprehensive measure of each system’s robustness and adaptability.

Simple queries involved clearly defined tickers and exact dates, making them easy to resolve through direct tool access. Ambiguous queries required interpretation of broader timeframes (e.g., "second quarter") or additional reasoning to align the input with valid data. Valid complex comparisons asked the model to analyze and compare multiple tickers over specific time windows, requiring contextual reasoning and multi-step synthesis. Invalid complex comparisons mirrored this setup but included one ticker with no available data, testing the model’s ability to gracefully handle partial failures. Invalid ticker queries directly referenced stocks with no available data, where the system was expected to recognize and explicitly communicate the absence of information rather than hallucinate or fabricate missing data. Lastly, vague/random queries required the model to select and analyze a random but available ticker, highlighting the benefit of resource awareness in context-rich environments.

| Scenario | MCP (Context-Rich) | Simple Tool Calling (Context-Limited) |
|---|---|---|
| Simple query | 2.12s (median 2.02s) / 434 tokens / 100% accuracy | 0.92s (median 0.85s) / 287 tokens / 100% accuracy |
| Ambiguous query | 14.25s (median 14.13s) / 1218 tokens / 100% accuracy | 5.13s (median 4.86s) / 2516 tokens / 100% accuracy |
| Valid complex comparison | 45.11s (median 45.89s) / 2577 tokens / 100% accuracy | 5.34s (median 4.56s) / 4006 tokens / 100% accuracy |
| Invalid complex comparison | 1.50s (median 1.46s) / 3519 tokens / 96% accuracy | 3.57s (median 3.05s) / 2192 tokens / 100% accuracy |
| Invalid ticker | 1.35s (median 1.33s) / 3939 tokens / 100% accuracy | 4.50s (median 4.41s) / 595 tokens / 40% accuracy |
| Vague / random stock | 10.90s (median 7.25s) / 4608 tokens / 100% accuracy | 2.94s (median 2.90s) / 470 tokens / 0% accuracy |

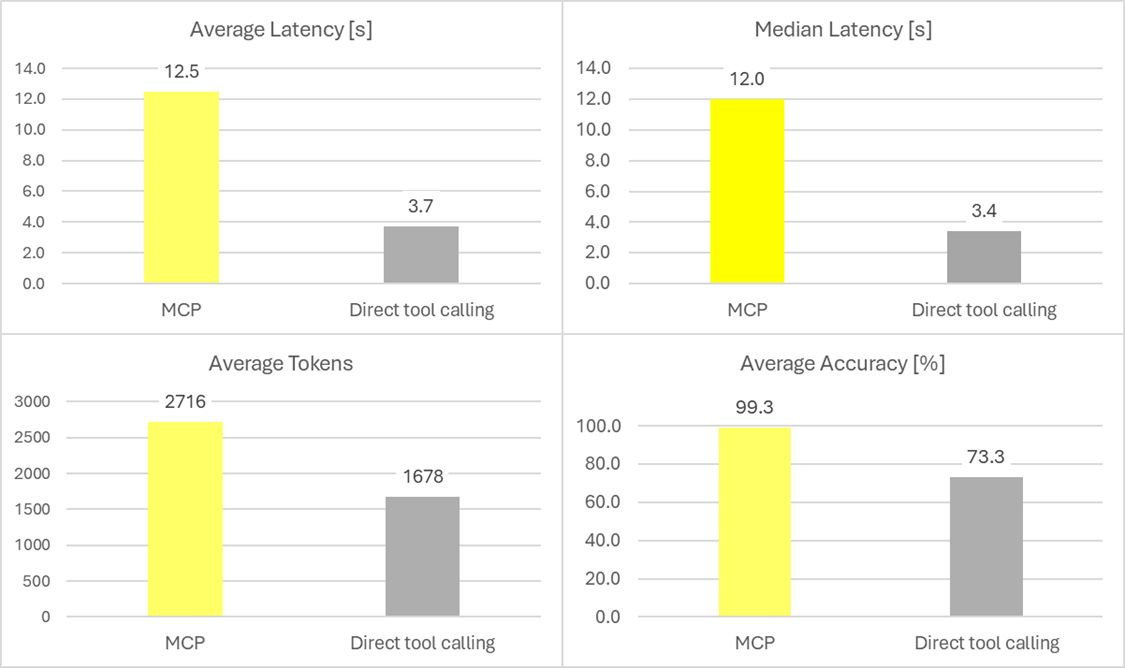
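To compare the two approaches at a glance, the table's figures can be averaged across the six scenario types. The short script below does this under two assumptions that the source does not state: the first latency figure in each cell is taken to be the mean, and every scenario is weighted equally because the number of runs per scenario is not given. The dictionary keys and function names are illustrative.

```python
from statistics import mean

# (latency in seconds, tokens, accuracy in %) per scenario, copied from the table.
# Assumption: the first latency figure in each cell is the mean.
MCP = {
    "simple": (2.12, 434, 100),
    "ambiguous": (14.25, 1218, 100),
    "valid_complex": (45.11, 2577, 100),
    "invalid_complex": (1.50, 3519, 96),
    "invalid_ticker": (1.35, 3939, 100),
    "vague": (10.90, 4608, 100),
}
DIRECT = {
    "simple": (0.92, 287, 100),
    "ambiguous": (5.13, 2516, 100),
    "valid_complex": (5.34, 4006, 100),
    "invalid_complex": (3.57, 2192, 100),
    "invalid_ticker": (4.50, 595, 40),
    "vague": (2.94, 470, 0),
}


def summarize(results: dict) -> dict:
    """Unweighted average of each metric across the scenario types."""
    latency, tokens, accuracy = zip(*results.values())
    return {
        "latency_s": round(mean(latency), 2),
        "tokens": round(mean(tokens)),
        "accuracy_pct": round(mean(accuracy), 1),
    }


def scenarios_where_mcp_is_faster() -> list[str]:
    """Scenario names in which the MCP latency is below the direct-call latency."""
    return [name for name in MCP if MCP[name][0] < DIRECT[name][0]]


if __name__ == "__main__":
    print("MCP:   ", summarize(MCP))
    print("Direct:", summarize(DIRECT))
    print("MCP faster in:", scenarios_where_mcp_is_faster())
```

With these inputs the averages come to about 12.54 s, 2716 tokens, and 99.3% accuracy for MCP, against 3.73 s, 1678 tokens, and 73.3% accuracy for direct tool calling. MCP is the faster option only in the two scenarios that involve a ticker with no data.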

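While direct tool calls were faster and used fewer tokens on average, they struggled with underspecified queries and with requests for unavailable data, reaching only 40% accuracy on invalid-ticker queries and 0% on vague ones.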

In particular, vague and multi-entity questions exposed the limitations of tool-only integration. MCP performed better in terms of accuracy due to its ability to:

- Recognize available tickers via a resource
- Invoke a standardized prompt for consistent analysis
- Chain tool responses through prompt-based summarization

The improved performance comes with a trade-off. MCP-based workflows often require multiple interactions: first, to query the list of available resources (e.g., valid tickers); second, to invoke a tool for retrieving relevant data; and finally, to apply a prompt template that guides how the model should analyze the data and structure its response. These additional steps explain the increased latency and token usage observed in MCP responses.
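The three-step sequence described above can be sketched as a small orchestration function. This is an illustration only, not the framework used in the experiment: the data, step labels, and function names are hypothetical, and the three helpers stand in for real MCP resource, tool, and prompt requests.

```python
# Hypothetical data standing in for the stock analysis server.
AVAILABLE = {"AAA": [100.0, 103.0, 101.5], "BBB": [50.0, 49.0, 52.5]}


def read_ticker_resource() -> list[str]:
    """Step 1 stand-in: read the resource that lists valid tickers."""
    return sorted(AVAILABLE)


def call_stock_tool(ticker: str) -> list[float]:
    """Step 2 stand-in: invoke the tool that returns price data."""
    return AVAILABLE[ticker]


def render_analysis_prompt(ticker: str, prices: list[float]) -> str:
    """Step 3 stand-in: fill the standardized analysis template."""
    return (
        f"Analyze {ticker} using these closing prices: {prices}. "
        "Report the percentage change and the high and low."
    )


def build_context(ticker: str) -> dict:
    """Run resource -> tool -> prompt and record which steps were needed."""
    steps = ["resource:tickers"]
    tickers = read_ticker_resource()
    if ticker not in tickers:
        # Stop early: the resource already shows there is nothing to fetch.
        message = (
            f"No data available for {ticker}. "
            f"Available tickers: {', '.join(tickers)}."
        )
        return {"steps": steps, "prompt": None, "message": message}
    steps.append("tool:get_stock_data")
    prices = call_stock_tool(ticker)
    steps.append("prompt:performance_analysis")
    return {
        "steps": steps,
        "prompt": render_analysis_prompt(ticker, prices),
        "message": None,
    }
```

A valid ticker costs three interactions, while an unknown one stops after the resource lookup. That early exit is at least consistent with the low MCP latencies measured for the invalid-ticker scenarios, although the source does not confirm it as the cause.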

By contrast, direct tool calling operates without this layered context. The model simply invokes a predefined function to retrieve data, assuming the data exists, without the ability to check availability in advance. It also lacks access to reusable prompt templates that could guide how to interpret or present results. As a result, it fails most often when a request targets a ticker with no data or leaves the choice of ticker open: accuracy fell to 40% on invalid-ticker queries and to 0% on vague queries. In scenarios where the tickers were valid and data was available, both approaches reached 100% accuracy. The remaining minor discrepancy, 96% for MCP on invalid complex comparisons, is likely attributable to inherent model variability, as a language model was used to validate and assess each generated response.

Despite the protocol’s design, most MCP clients today do not auto-load prompts or resources without explicit user interaction. This presents a strategic challenge: building AI that "understands its environment" is only possible if the runtime client enables that visibility.

According to modelcontextprotocol.io/clients:

- Full protocol support (tools, prompts, and resources) is rare.
- Most clients (e.g., AgentAI, Amazon Q IDE, ChatGPT, Warp) support tools, but not prompt/resource awareness.
- Resource usage often requires user approval or custom UI/UX elements for sampling, which are not standardized across environments.

In short: the protocol supports dynamic context, but the clients often do not.

Enterprise teams looking to deploy MCP-based systems must treat client capabilities as a design constraint, not an implementation detail. Building around this constraint requires a few key strategies:

- Choose clients based on feature completeness, not just brand or interface. Prioritize those that support automatic prompt/resource resolution if contextual accuracy is critical.
- Design fallback workflows: when prompts or resources are not auto-available, ensure tool call outputs include minimal context (e.g., embedding ticker validation) to prevent model missteps.
- Deploy internal clients with enhanced MCP support where needed, particularly in domains like compliance, finance, or legal analysis, where ambiguity is common and context is paramount.
- Govern and version prompt and resource definitions with the same rigor applied to APIs or backend code.
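The fallback strategy in the list above, embedding ticker validation in tool output, might look like the following for a tool-only client. This is a minimal sketch under stated assumptions: the field names (`status`, `available_tickers`, `note`) and the data are hypothetical, not part of MCP or of the experiment.

```python
# Hypothetical data; in practice this would come from the data source.
KNOWN_PRICES = {"AAA": [100.0, 103.0, 101.5], "BBB": [50.0, 49.0, 52.5]}


def get_stock_data_with_context(ticker: str) -> dict:
    """Return price data together with the context a tool-only client lacks."""
    symbol = ticker.strip().upper()
    available = sorted(KNOWN_PRICES)
    if symbol not in KNOWN_PRICES:
        # Say explicitly that the ticker is unknown and list the valid ones,
        # so the model has no reason to fabricate values.
        return {
            "status": "unknown_ticker",
            "ticker": symbol,
            "available_tickers": available,
            "data": [],
            "note": "No data exists for this ticker; do not estimate values.",
        }
    return {
        "status": "ok",
        "ticker": symbol,
        "available_tickers": available,
        "data": KNOWN_PRICES[symbol],
    }
```

The design choice here is to spend a few extra tokens on every response so that the model always sees what the resource would otherwise have told it.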

With the added flexibility of context-aware AI comes added responsibility. Resource auto-discovery and prompt chaining can introduce new vectors for misuse:

- Prompt injection via compromised templates
- Over-disclosure from sensitive resources
- Sampling abuse if resource availability is overexposed

MCP provides tools to mitigate these, including URI scoping, secure transport mechanisms (e.g., stdio or HTTPS), and explicit user approval flows for resource access. Proper use of the protocol’s safety features is essential in enterprise environments.
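As one way to picture URI scoping combined with an approval step, the sketch below gates every resource read behind an allow-list and a user-approval callback. It is an application-level illustration, not an API defined by MCP: the `stocks://` scheme, the scope table, and the `approve` and `loader` callbacks are all assumptions.

```python
from typing import Callable
from urllib.parse import urlsplit

# Hypothetical allow-list of (scheme, authority) pairs the agent may read.
ALLOWED_SCOPES = {("stocks", "tickers"), ("stocks", "prices")}


def is_in_scope(uri: str) -> bool:
    """Accept only URIs in an allowed scope, with no parent-directory segments."""
    parts = urlsplit(uri)
    if ".." in parts.path.split("/"):
        return False
    return (parts.scheme, parts.netloc) in ALLOWED_SCOPES


def read_resource(
    uri: str,
    approve: Callable[[str], bool],
    loader: Callable[[str], str],
) -> str:
    """Load a resource only if it is in scope and the user approves."""
    if not is_in_scope(uri):
        raise PermissionError(f"URI outside allowed scope: {uri}")
    if not approve(uri):
        raise PermissionError(f"User declined access to: {uri}")
    return loader(uri)
```

For example, `read_resource("stocks://tickers", approve=lambda u: True, loader=lambda u: "AAA,BBB")` returns the loader's result, while a `file:///etc/passwd` request is rejected before the approval callback is consulted.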

The Model Context Protocol offers a compelling path forward for building adaptable, intelligent, and reliable AI agents, but only when the surrounding ecosystem supports its full capabilities. While tools are widely accessible through MCP today, prompts and resources often are not. This gap between protocol design and client behavior limits real-world value unless addressed intentionally.

Context-aware AI will not emerge by accident; it must be architected. The decision to integrate MCP must go beyond protocol adoption and include a clear-eyed view of client capabilities, fallback design, and governance models. Only then can organizations unlock AI systems that act with understanding, not just execution.