The last two years were about experiments. Organizations launched proofs of concept with generative AI, moved selected workloads to cloud and refreshed their internal narratives around “digital transformation.”

This outlook is not a catalog of technologies. It is a map of where we see investment, innovation and pressure converging in our global work with banks, insurers, asset and wealth managers, payments providers and supporting market infrastructures.

By 2026, the tone has changed. Boards and regulators are asking different questions:

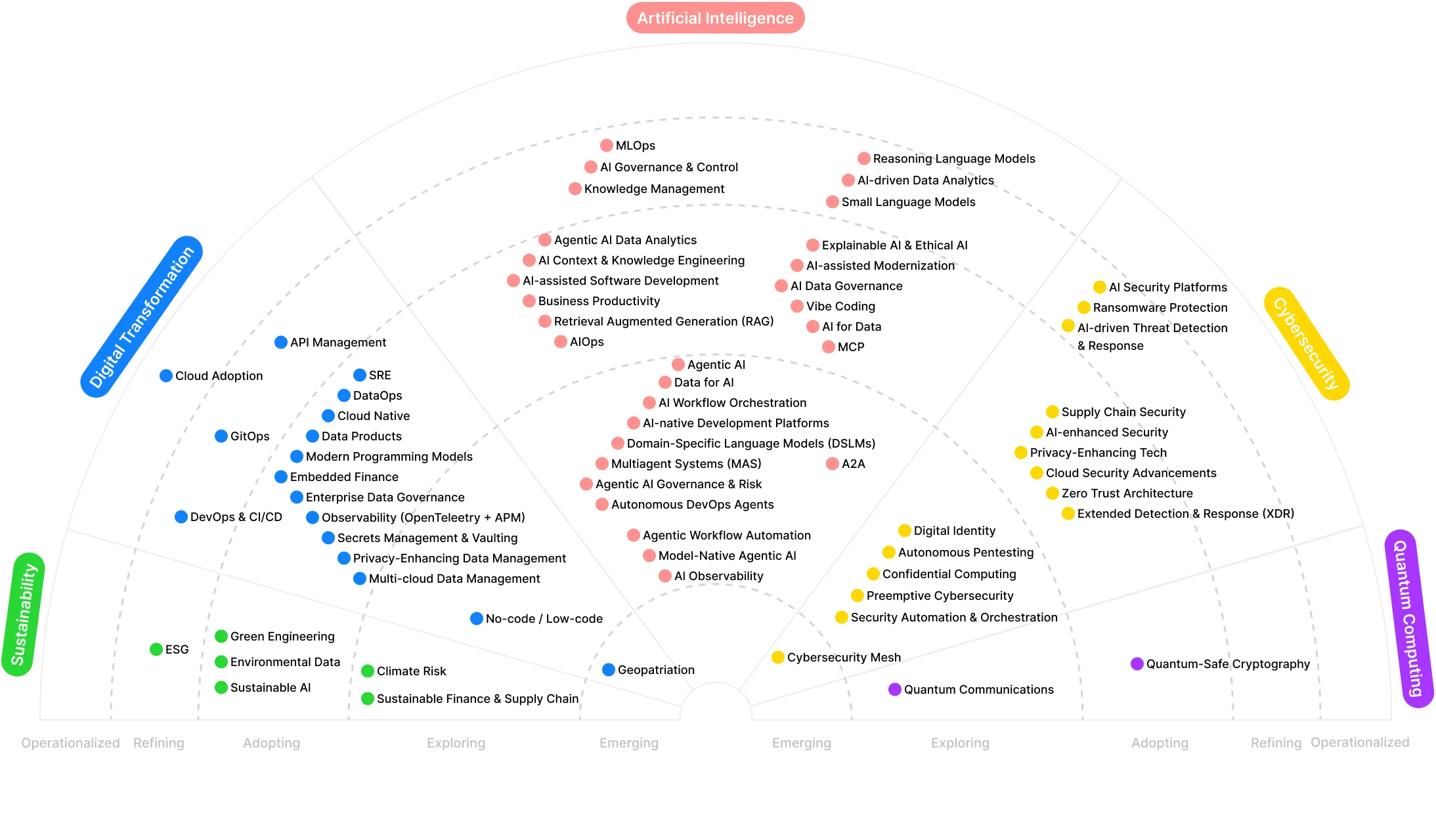

Across our global financial services work, six themes repeatedly surfaced as decision points for CIOs, CTOs, CISOs and heads of business:

These trends are not independent. Agentic AI presumes a certain level of cloud maturity. AI security is impossible without architectural visibility. Green engineering will increasingly shape what “good” looks like for AI workloads. Quantum-safe cryptography intersects directly with core modernization and long-lived financial instruments.

They also reflect several cross-cutting dynamics we observe across institutions. Clients are no longer satisfied with dashboards. They want systems that take bounded, auditable action on their behalf. Fragmented systems and legacy integration patterns continue to act as hidden constraints, limiting what can be automated or secured no matter how advanced the AI model.

Shadow AI, model sprawl and cloud instances left running after pilots show how governance is still catching up with early experimentation. Energy and efficiency, once peripheral considerations, are becoming strategic variables as AI workloads scale. And regional divergence creates global pressure for institutions operating across markets.

This outlook is grounded in:

Client demand signals

The briefs we receive, RFP themes and projects delivered across global banking, capital markets and insurance.

Delivery and architecture patterns

Where modernization work is actually being funded: what gets refactored, what gets containerized, what is wrapped vs rebuilt.

Synechron FinLabs accelerators and experiments

More than 100 accelerators developed over eight to 10 years, used as a lens into emerging use cases that move from “demo” to “deployment.”

Interviews with our experts

In AI, cybersecurity, digital transformation and software engineering, covering both success stories and stalled initiatives.

We evaluate each trend across four dimensions:

We also distinguish between:

“AI for X” – using AI to augment an existing function (for example, security operations).

“X for AI” – adapting that function so AI itself is governed, secured and sustainable (for example, security for AI models).

What It Is

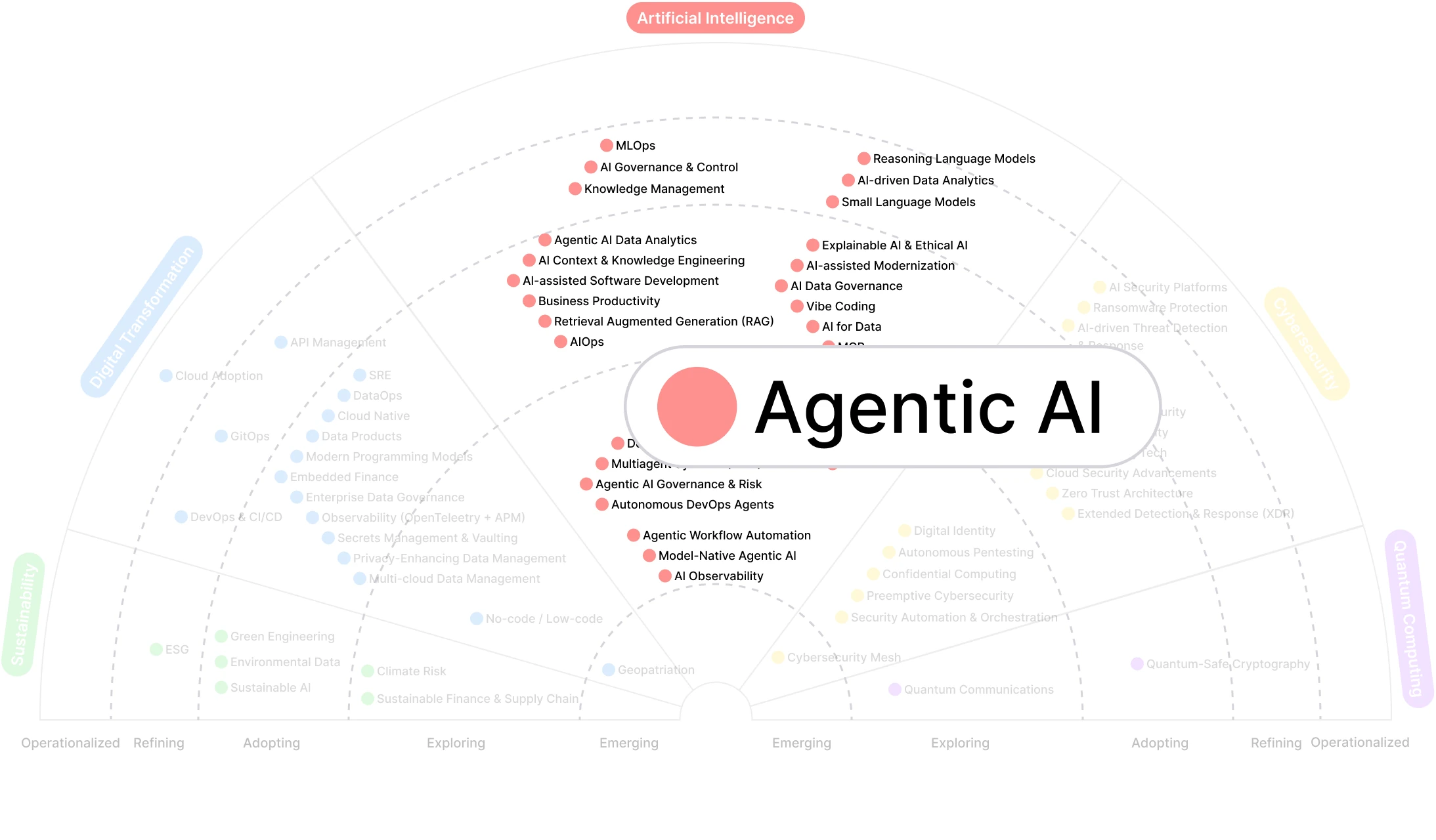

Agentic AI refers to systems that do more than answer questions or generate content. They can decide what to do next, orchestrate tools and data sources and execute multistep plans toward a goal within defined constraints.

Today, most so-called “agents” are still advanced copilots: they check facts, provide grounded responses and support humans in making decisions. By the end of 2026, we expect more systems that own parts of the decision loop in controlled environments.

Many institutions are beginning to build AI-native developer platforms that provide consistent environments for testing, integrating and governing agentic systems. These internal platforms bring together model hosting, routing, evaluation tooling, prompt libraries and secure connections into enterprise systems.

They provide a structured space where developers and architects can design, test and validate agent behavior with guardrails. They also reduce fragmentation by giving teams shared patterns for building and deploying agents, which shortens development cycles and creates more predictable operational outcomes.
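The definition above (an agent that works through a multistep plan, calls tools and stays within defined constraints) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `BoundedAgent` class, the two tools and the `escalate_to_human` signal are hypothetical names, and the plan is passed in as a fixed list where a real system would have a model propose each step.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tools, invented for illustration only.
def look_up_case(case_id: str) -> str:
    return f"case {case_id}: address change requested"


def draft_reply(summary: str) -> str:
    return f"Draft reply based on: {summary}"


@dataclass
class BoundedAgent:
    """Runs a plan step by step, but only with allow-listed tools and a step budget."""

    allowed_tools: dict[str, Callable[[str], str]]
    max_steps: int = 5
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def run(self, plan: list[tuple[str, str]]) -> str:
        result = ""
        for step_no, (tool_name, argument) in enumerate(plan, start=1):
            # Constraint 1: never exceed the agreed step budget.
            if step_no > self.max_steps:
                self.audit_log.append((tool_name, argument, "refused: step budget exceeded"))
                return "escalate_to_human"
            # Constraint 2: only tools on the allow-list may be called.
            tool = self.allowed_tools.get(tool_name)
            if tool is None:
                self.audit_log.append((tool_name, argument, "refused: tool not allowed"))
                return "escalate_to_human"
            result = tool(argument)
            # Every executed step is recorded so the run can be audited later.
            self.audit_log.append((tool_name, argument, result))
        return result
```

In this sketch, a plan such as `[("look_up_case", "C-17"), ("draft_reply", "address change")]` runs to completion, while any step naming a tool outside the allow-list stops the run and hands control back to a person. The audit log is what makes each autonomous step attributable afterward.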

Why It Matters in 2026

For financial institutions, the promise of agentic AI lies in:

The risk: deploying agentic systems into brittle architectures or ambiguous ownership models, where it is unclear who is accountable when an autonomous step goes wrong.

Where Momentum Is Showing Up

Key Uncertainties

Big Questions for Leaders

Synechron Vantage Point

We see two realities simultaneously:

Most “agentic” deployments today are fact-checking copilots with human-in-the-loop approvals.

However, the next wave of client demand is clearly oriented toward systems that can own more of a workflow, from drafting a response to opening tickets, calling APIs and closing tasks.

Our own accelerators have moved from showcasing techniques (for example, retrieval-augmented generation in Amplify pitchbook generation) to designing business-relevant agent flows that sit on top of existing systems and are realistic about adoption and governance constraints.

What It Is

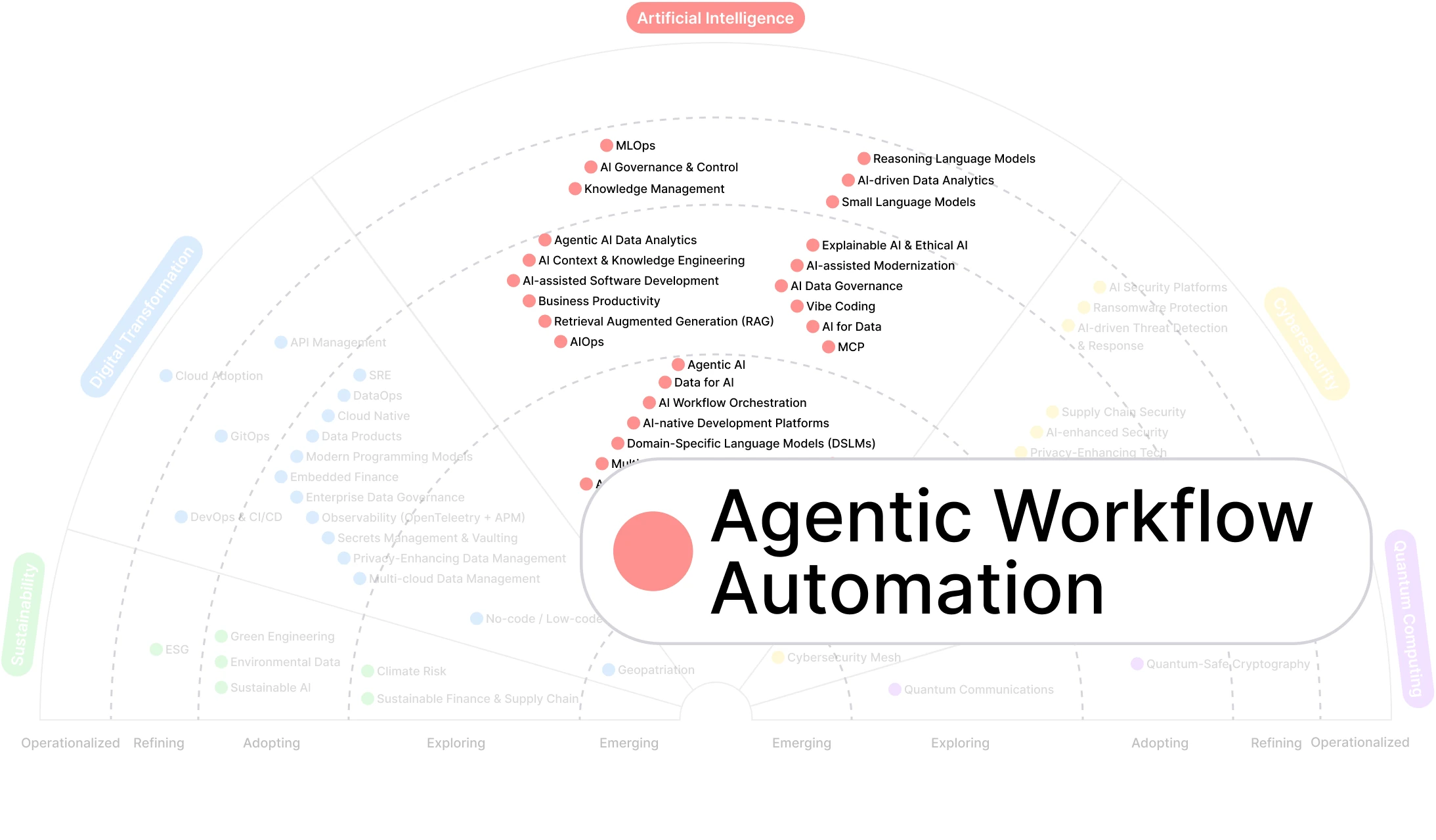

Agentic workflow automation applies agentic AI directly to business processes: email triage, case routing, document assembly, meeting scheduling, basic approvals and beyond. Instead of a human pushing work through a sequence of tools, an AI-driven orchestrator handles the routine steps and escalates exceptions.
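As a rough illustration of "handles the routine steps and escalates exceptions," the sketch below triages an incoming email. It is a simplified stand-in, not a production design: keyword matching takes the place of a model, and the queue names, keywords and the `Decision` type are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    queue: str
    escalated: bool
    reason: str


# Hypothetical routing table: keyword -> routine work queue.
ROUTINE_ROUTES = {
    "statement": "document_assembly",
    "meeting": "scheduling",
    "address": "case_routing",
}

# Hypothetical terms that always go to a person, whatever else the email says.
ESCALATION_TERMS = ("complaint", "fraud", "legal")


def triage(email_text: str) -> Decision:
    text = email_text.lower()
    # Exceptions first: sensitive topics are never handled automatically.
    for term in ESCALATION_TERMS:
        if term in text:
            return Decision("human_review", True, f"sensitive term: {term}")
    # Routine path: route only when exactly one queue matches.
    matches = [queue for keyword, queue in ROUTINE_ROUTES.items() if keyword in text]
    if len(matches) == 1:
        return Decision(matches[0], False, "single routine match")
    # Anything ambiguous or unrecognized is an exception by default.
    return Decision("human_review", True, "no unambiguous routine match")
```

The design choice worth noting is the default: when the orchestrator is unsure, it escalates rather than guesses, which keeps automated handling confined to cases it can classify cleanly.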

Why It Matters in 2026

The majority of knowledge-work time in financial institutions is still absorbed by:

Agentic workflow automation can:

General-purpose models are improving, but financial institutions continue to see stronger performance from models trained on domain language and regulatory context. These models handle specialized vocabulary, structured financial data and compliance constraints more accurately. They also reduce hallucination risks and produce outputs that align more closely with internal standards and product definitions.

In practice, domain-specific models allow agentic systems to draft higher-quality client communications, generate more accurate documentation and support decisions with fewer corrections. Institutions that combine agentic orchestration with models grounded in financial-language realities achieve faster adoption and lower operational friction.

Where Momentum Is Showing Up

Key Uncertainties

Big Questions for Leaders

Synechron Vantage Point

Our experience suggests a two-phase approach. First, start with the basics: email, scheduling, simple document workflows and internal knowledge access, giving every employee an AI layer on top of existing tools. Second, deepen into core business processes where the value and readiness justify more complex orchestrations.

In client work, we see the most durable wins where agentic automation is paired with strong product management and UX design, not treated as a technical add-on.

What It Is

AI-driven threat detection and response refers to the use of AI to augment security operations, from anomaly detection and vendor-risk analysis to automated remediation, alongside efforts to secure AI itself (models, data flows and usage).

We distinguish between:

AI for security – using AI to strengthen defenses.

Security for AI – securing the AI stack, from models and data to prompts and outputs.

Why It Matters in 2026

Security teams face:

AI-driven tooling can help:

At the same time, unmonitored AI usage and unprotected models create new attack surfaces that many organizations have barely begun to manage.

Many organizations are expanding security practices to include end-to-end provenance for data and model outputs. Provenance controls track how data enters, moves through and leaves AI systems. They also record which models, versions and prompts contributed to a decision or generated a specific output.

This level of lineage is becoming a foundational requirement for auditability, especially as institutions automate more steps in security operations and business workflows. Clear lineage makes it easier to validate decisions, investigate anomalies and demonstrate compliance to regulators. It also supports safer agent deployments by ensuring that actions can be traced back to their origins.
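One way to picture such lineage is a hash-chained log in which each record names the model, version, prompt and inputs behind an output and also commits to the record before it. The sketch below is an assumption about how this could be structured, using only Python's standard `hashlib` and `json` modules; the field names and the `ProvenanceRecord` type are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

GENESIS = "0" * 64  # placeholder hash for the first record in a chain


def sha256_text(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class ProvenanceRecord:
    model: str
    model_version: str
    prompt_sha256: str
    input_sources: tuple[str, ...]
    output_sha256: str
    prev_hash: str  # digest of the previous record, linking the chain

    def digest(self) -> str:
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def append_record(chain, model, model_version, prompt, input_sources, output):
    """Add a record that stores hashes of the prompt and output, not the raw text."""
    prev_hash = chain[-1].digest() if chain else GENESIS
    record = ProvenanceRecord(
        model, model_version, sha256_text(prompt),
        tuple(input_sources), sha256_text(output), prev_hash,
    )
    chain.append(record)
    return record


def verify_chain(chain) -> bool:
    """Return False if any record was altered or reordered after being written."""
    expected = GENESIS
    for record in chain:
        if record.prev_hash != expected:
            return False
        expected = record.digest()
    return True
```

Because every record includes the digest of its predecessor, changing an earlier entry (for example, the model version used for a decision) breaks verification for everything after it, which is the property auditors typically look for in a lineage trail.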

Where Momentum Is Showing Up

Today, AI in security is primarily used for:

Adoption has stalled for many because CISOs are now asking a different question:

Once AI identifies a threat, what can it safely do about it?

Tools like cloud security posture management platforms are beginning to add visibility into AI model usage, for example scanning cloud environments for models and usage patterns, but the governance frameworks are still emerging.

Key Uncertainties

Big Questions for Leaders

Synechron Vantage Point

From our work and internal practice:

Many organizations are AI-aware in security, but adoption remains limited to summarization and prioritization tools. Higher adoption will require clear, tested patterns for closing the loop with action.

Security teams are still working to unlearn traditional paradigms and learn the full AI stack, from business processes using AI to architecture and model security.

Synechron is building AI governance frameworks, updating security training to include AI topics, deploying internal accelerators to augment our own security operations and modernizing security tooling to keep pace with AI-driven workloads.

What It Is

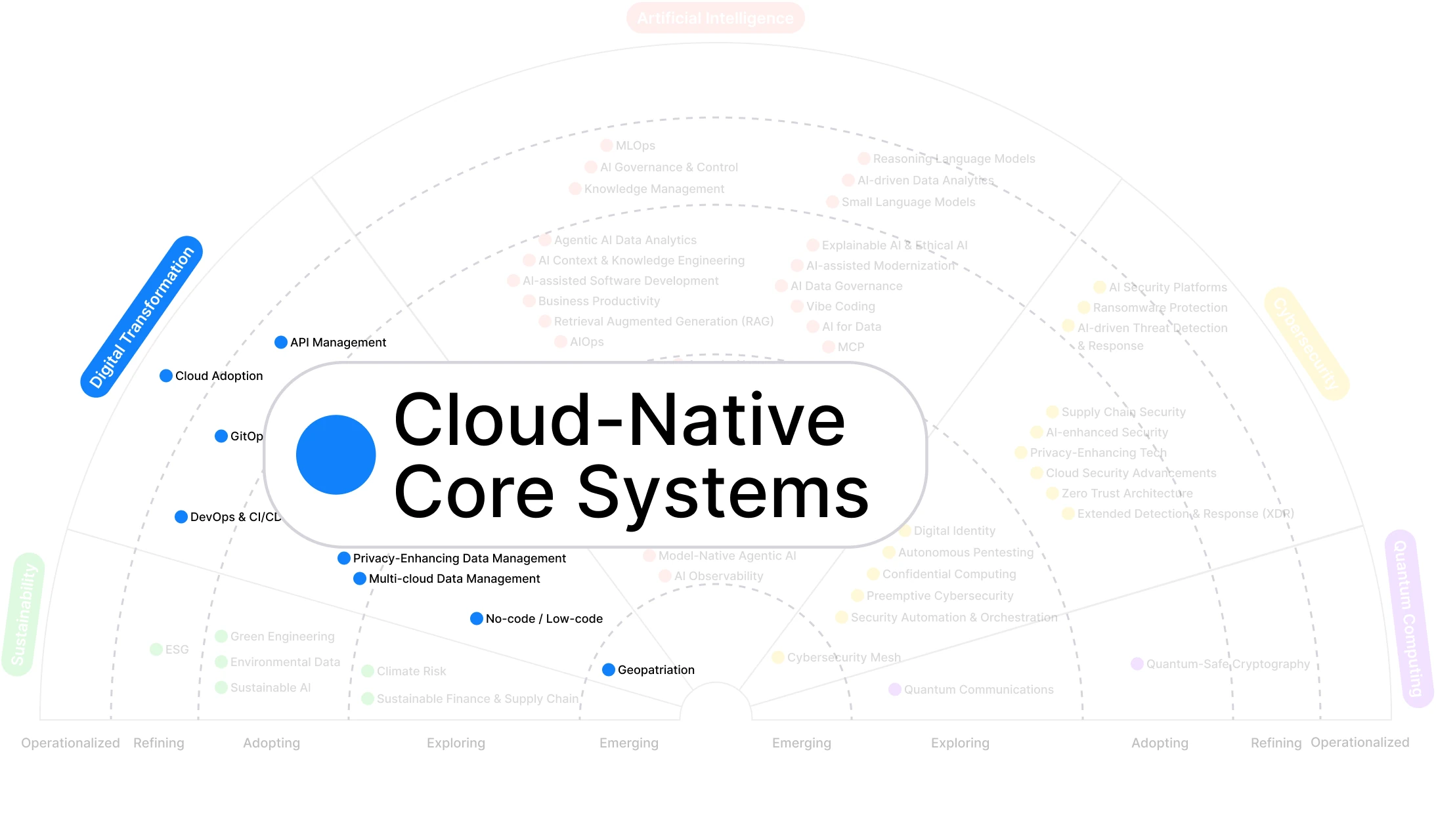

Cloud-native core systems are built to run in cloud environments using microservices, containers, modern APIs and DevOps practices. For financial institutions, cloud native increasingly means re-platforming or refactoring fragmented internal systems into coherent, scalable, access-controlled ecosystems, often on private or sovereign cloud.

Why It Matters in 2026

Many banks still manage key processes across numerous internal systems:

Cloud-native modernization allows institutions to:

Client demand remains focused on operational excellence: lower internal costs, more efficient staff and better digital experiences. Cloud is not an end in itself, but a necessary enabler.

Where Momentum Is Showing Up

Private and sovereign cloud are common models in financial services, reflecting sensitivity around data and regulatory constraints. Cloud instances must often be physically located within national borders, for example, Canadian workloads staying within Canada.

Institutions that once treated cloud as an experiment now routinely build new internal applications cloud-ready, even if some workloads remain on-premises until security teams give a green light.

Regional digital strategies differ:

In the Middle East, digital work is mobile-first and tightly integrated with national digital identity systems.

In North America, more effort is aimed at internal productivity and product design, UX and front-end development.

Key Uncertainties

Regulatory evolution

How will rules around data residency, critical infrastructure and AI workloads on cloud evolve?

Integration costs

How far should institutions go in refactoring versus wrapping legacy systems?

Cloud economics

Many organizations moved to cloud expecting cost savings that did not materialize due to operational practices (for example, instances left running).
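The "instances left running" problem lends itself to a simple periodic check. The sketch below works on a hypothetical inventory export rather than any real cloud provider API; the `Instance` fields, the 14-day idle threshold and the 730-hour month are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Instance:
    name: str
    owner: str
    last_activity: datetime
    hourly_cost: float  # in whatever currency the inventory uses


def find_idle(instances, now, idle_after=timedelta(days=14)):
    """Return instances with no recorded activity for longer than the threshold."""
    return [i for i in instances if now - i.last_activity > idle_after]


def monthly_waste(idle_instances, hours_per_month=730):
    """Estimate what the idle instances cost if left running for a month."""
    return sum(i.hourly_cost for i in idle_instances) * hours_per_month


inventory = [
    Instance("genai-pilot-01", "innovation-lab", datetime(2025, 12, 1), 0.50),
    Instance("payments-api", "core-banking", datetime(2026, 1, 30), 1.20),
]
idle = find_idle(inventory, now=datetime(2026, 1, 31))
print([i.name for i in idle], monthly_waste(idle))
```

Recording an owner against every instance matters as much as the threshold itself: a flagged instance with no accountable owner is the governance gap, and the idle compute is only its symptom.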

Big Questions for Leaders

Synechron Vantage Point

Our engagements consistently surface the same patterns. The first mandate is rarely “move to cloud.” It is “solve this fragmented process and make people’s lives easier,” and cloud-native architectures are the best tool available for doing so.

We differentiate by bringing brains, not bodies: we probe into API design, middleware, deployment patterns, performance and load from the first discussions, often raising questions clients have not yet considered.

FinLabs allows us to put working prototypes into clients’ hands, turning cloud-native and AI possibilities into tangible experiences.

What It Is

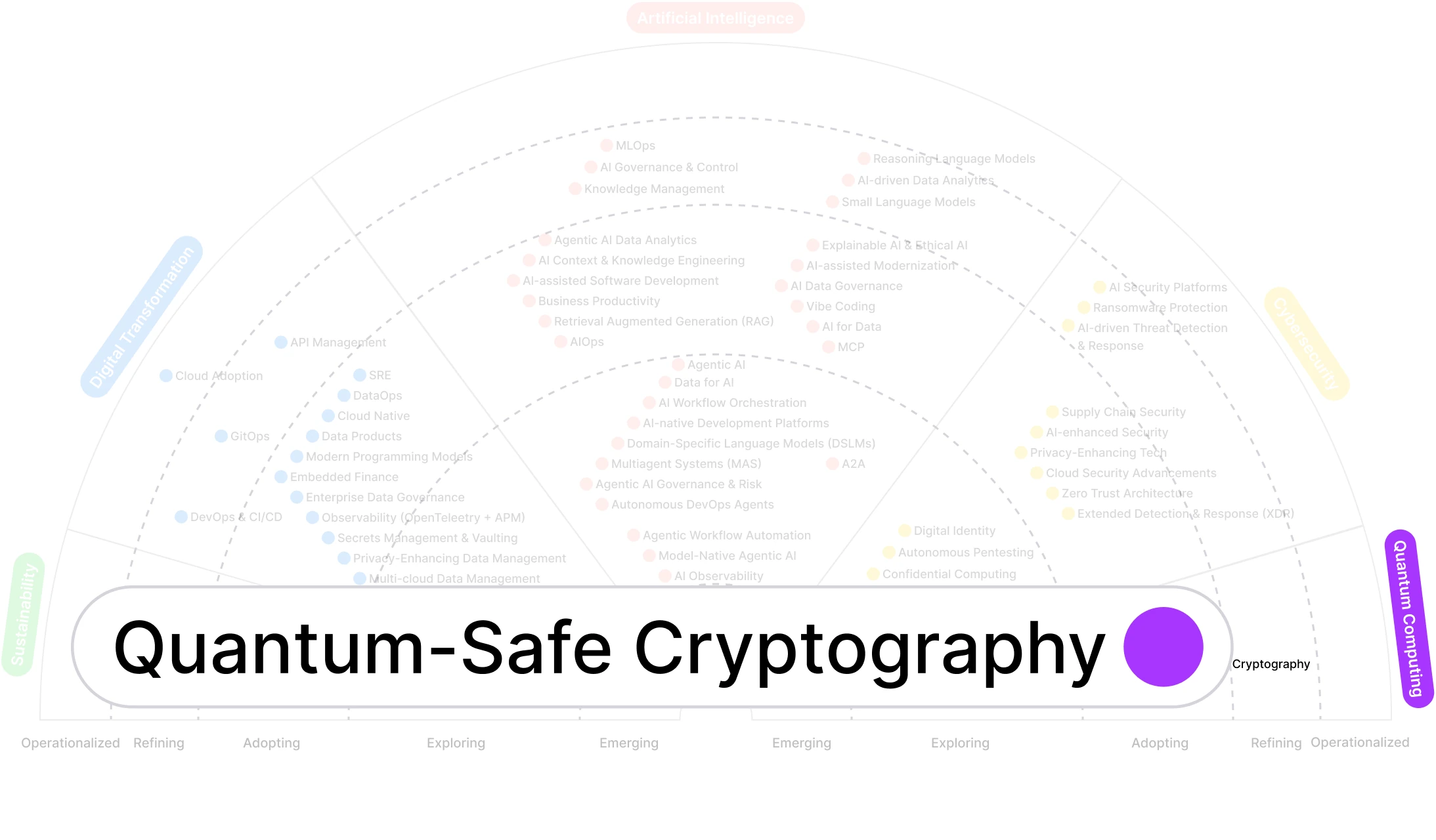

Quantum-safe (or post-quantum) cryptography encompasses cryptographic algorithms designed to be secure against both classical and quantum attacks. While practical, large-scale quantum computers capable of breaking today’s widely used schemes are not yet in production, financial institutions must consider the long lifetime of data and instruments they manage.

Why It Matters in 2026

Financial services handle:

Data encrypted today may be harvested and stored for decryption once quantum capabilities are available (“harvest now, decrypt later”). This makes quantum-safe planning relevant now, not in a hypothetical future.

Where Momentum Is Showing Up

For many institutions, quantum-safe cryptography is less about immediate algorithm swaps and more about governance and inventory: understanding what must be protected, where and for how long.
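An inventory of this kind can start as a simple table of what is protected, where, with which algorithm and for how long. The sketch below applies a rule of thumb commonly attributed to Michele Mosca: an asset is exposed if the years its data must stay confidential plus the years needed to migrate it exceed the years until a relevant quantum threat. The asset names, locations and the 10-year threat horizon are hypothetical planning inputs, not forecasts.

```python
from dataclasses import dataclass


@dataclass
class CryptoAsset:
    name: str
    location: str
    algorithm: str
    confidentiality_years: int  # how long the protected data must stay secret
    migration_years: int        # estimated time to move to a quantum-safe scheme


# Public-key schemes known to be breakable by Shor's algorithm on a
# sufficiently large quantum computer. The list is illustrative, not exhaustive.
QUANTUM_VULNERABLE = {"RSA-2048", "ECDSA-P256", "ECDH-P256"}


def at_risk(asset: CryptoAsset, years_until_threat: int) -> bool:
    """Exposed if confidentiality period + migration time > time until the threat."""
    if asset.algorithm not in QUANTUM_VULNERABLE:
        return False
    return asset.confidentiality_years + asset.migration_years > years_until_threat


inventory = [
    CryptoAsset("mortgage-archive", "on-prem vault", "RSA-2048", 30, 3),
    CryptoAsset("session-tokens", "api gateway", "ECDH-P256", 1, 2),
    CryptoAsset("backup-volumes", "private cloud", "AES-256", 25, 1),
]

# Assumed planning horizon of 10 years, purely for illustration.
priority = [a.name for a in inventory if at_risk(a, years_until_threat=10)]
print(priority)
```

The point of the exercise is prioritization: long-lived records protected by vulnerable public-key schemes surface first, while short-lived data may reasonably wait for scheduled upgrades.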

Key Uncertainties

Big Questions for Leaders

Synechron Vantage Point

In our work modernizing core systems and security architectures, we find that:

What It Is

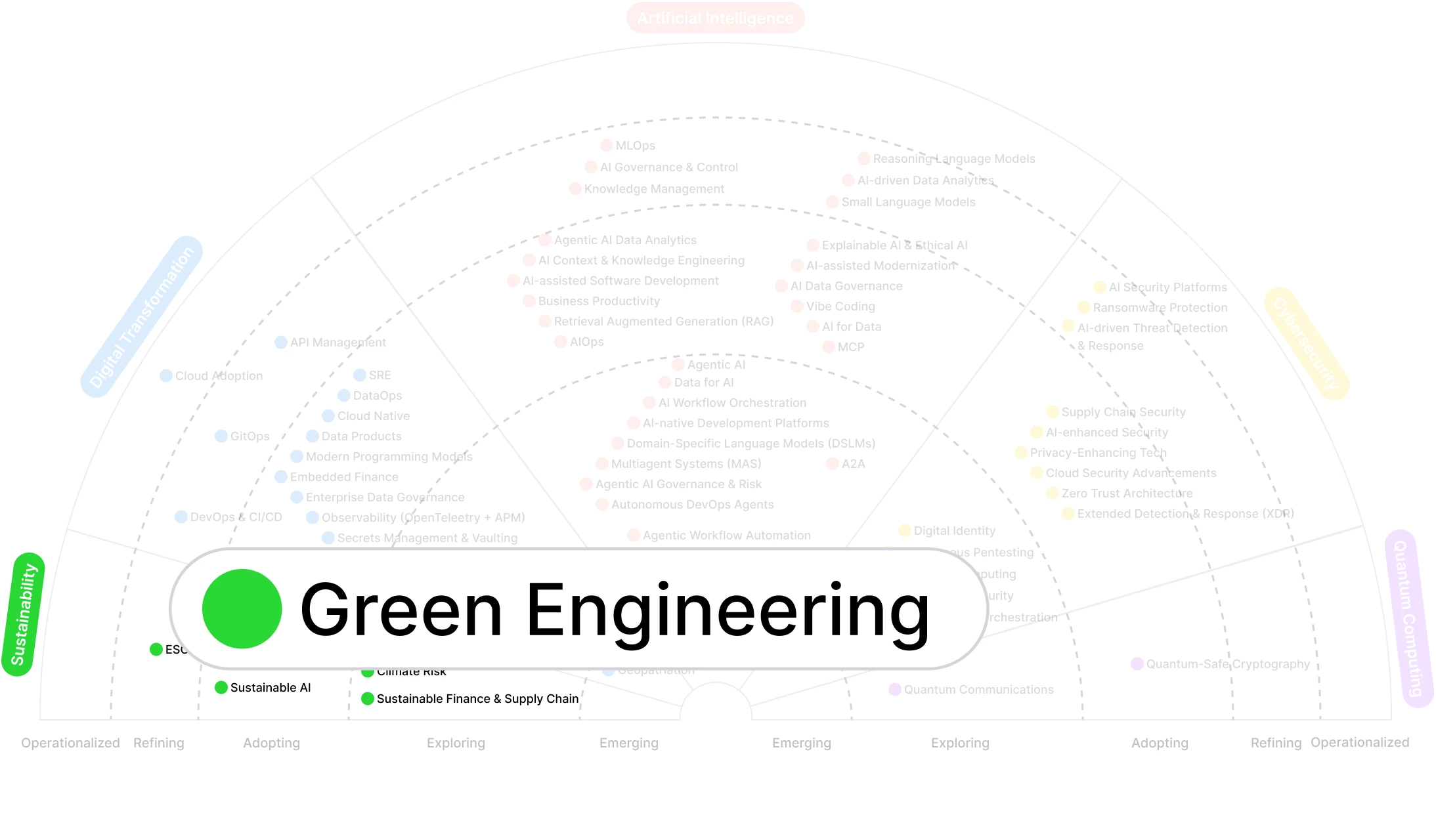

Green engineering in software focuses on designing and implementing systems to minimize energy consumption and environmental impact, without sacrificing performance. This includes choices of languages, runtimes, algorithms, hardware and deployment patterns that deliver the same (or better) business outcomes with less compute and power.
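The idea of delivering the same outcome with less compute and power comes down to simple arithmetic: energy is average power multiplied by runtime. The sketch below compares two implementations of the same job; the wattages, runtimes and electricity price are hypothetical figures chosen only to show the calculation.

```python
def energy_kwh(avg_power_watts: float, runtime_hours: float) -> float:
    """Energy in kilowatt-hours = average power (W) x runtime (h) / 1000."""
    return avg_power_watts * runtime_hours / 1000.0


def savings(baseline: tuple[float, float], candidate: tuple[float, float],
            price_per_kwh: float) -> dict[str, float]:
    """Compare two (avg_power_watts, runtime_hours) profiles for the same job."""
    kwh_saved = energy_kwh(*baseline) - energy_kwh(*candidate)
    return {"kwh_saved": kwh_saved, "cost_saved": kwh_saved * price_per_kwh}


# Hypothetical nightly batch job: original build versus an optimized build.
result = savings(baseline=(400.0, 10.0), candidate=(250.0, 8.0), price_per_kwh=0.15)
print(result)
```

The same calculation yields both an energy figure and a cost figure, so the two can be tracked side by side for any workload whose power draw and runtime are measured.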

Why It Matters in 2026

Paradoxically, just as interest in green engineering was rising, generative AI’s boom, driven by GPU-intensive workloads, pulled attention away:

Yet the underlying pressures are not going away:

Where Momentum Is Showing Up

Despite overall neglect, we see meaningful activity in several areas:

At the frontier, research into neuromorphic computing and analog AI chips suggests the possibility of orders-of-magnitude energy reductions, for example, chips capable of trillions of operations per second at a few watts instead of hundreds or thousands. Investment and availability, however, remain limited.

Why Adoption Is Still Limited

Perspective from our experts:

In short, the motivation is cost, not climate, but the technical choices that save money are often the same ones that reduce energy.

Regional Nuances

Key Uncertainties

Big Questions for Leaders

Synechron Vantage Point

We expect green engineering to re-emerge as a visible board-level topic once AI experimentation normalizes and energy becomes a more explicit constraint.

The last two years were about testing possibilities. 2026 will be about turning those possibilities into durable outcomes. The institutions that lead will no longer chase isolated trends. They will weave AI, cloud, security, sustainability and cryptography into a single, coherent technology fabric.

Agentic AI without clean data and secure workflows will stall. Cloud-native modernization without AI-ready architecture will under-deliver. AI security without security for AI will leave core risks unmanaged. And AI at scale without green engineering will collide with energy, cost and policy constraints.

The winners will be those who connect ambition with execution discipline, who treat architecture, governance and talent as strategic levers.

At Synechron, we believe the next era is about engineering trust, resilience and advantage at scale. The question for 2026 is not “What can AI do?” but “What can your organization achieve when every technology decision compounds into lasting value?”